NHTSA Complaint Update: First Tesla Accident Linked To Full Self-Driving Vehicle

According to an NHTSA complaint, a Tesla Model Y equipped with FSD was "severely damaged" after turning into the wrong lane and colliding with another vehicle.

Saturday, November 13, 2021 | Chimniii Desk

Key Highlights

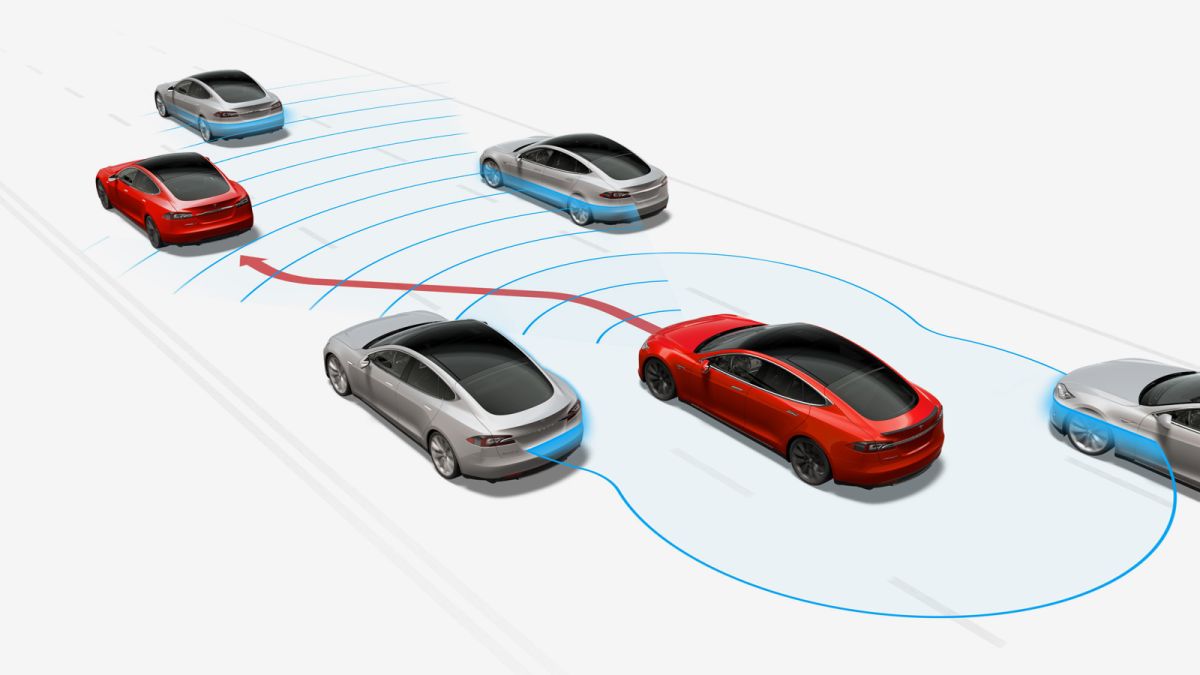

- A new complaint filed with the National Highway Traffic Safety Administration (NHTSA) outlines what appears to be the first serious incident involving a Tesla Model Y equipped with Full Self-Driving (FSD) beta technology.

- The Verge obtained a copy of the crash report, which comes just one week after the manufacturer was compelled to recall 11,704 automobiles.

- In a late October interview with CNBC, National Transportation Safety Board Chairwoman Jennifer Homendy reiterated her criticism, saying that Tesla's portrayal of FSD as "self-driving" was "misleading" and may have encouraged customers to use the feature irresponsibly, that is, to let the car drive itself.

- Tesla itself has acknowledged that FSD achieves only Level 2 autonomy on the industry's 0-to-5 scale.


A new complaint filed with the National Highway Traffic Safety Administration (NHTSA) outlines what appears to be the first serious incident involving a Tesla Model Y equipped with Full Self-Driving (FSD) beta technology. The Verge obtained a copy of the crash report, which comes just one week after the manufacturer was compelled to recall 11,704 vehicles due to an FSD-related glitch.

According to the report, the collision occurred on November 3 in Brea, California, when a Model Y operating in FSD mode entered the wrong lane. The Tesla was then struck by another vehicle, resulting in "serious damage" to its driver's side. The report states that no one was injured in the incident.

Media outlets reached out to Tesla and the NHTSA for comment on the complaint but did not receive a response.

Throughout the year, automotive safety experts and authorities have raised alarms about the risks associated with Full Self-Driving. Consumer Reports reported in July that FSD lacked proper safeguards, causing Tesla cars to miss turns, scrape against shrubs, and, in some cases, veer toward parked cars. Consumer Reports stated that FSD's safety flaws posed a threat not only to Tesla drivers but also to pedestrians, cyclists, and other motorists.

Only a few months later, Chairwoman Jennifer Homendy of the US National Transportation Safety Board criticised the company for letting drivers request access to the feature before it had addressed what the agency deemed "design deficiencies." In a late October interview with CNBC, Homendy reiterated those concerns, saying that Tesla's portrayal of FSD as "self-driving" was "misleading" and may have encouraged customers to use the feature irresponsibly, that is, to let the car drive itself.

Tesla itself has acknowledged that FSD achieves only Level 2 autonomy on the industry's 0-to-5 scale. Meanwhile, Democratic Senators Richard Blumenthal and Edward Markey have asked the Federal Trade Commission to investigate whether Tesla's marketing of FSD constitutes misleading advertising.

All of this suggests it was only a matter of time before a serious crash involving FSD occurred. Musk himself conceded as much earlier this year in a tweet urging drivers to "be paranoid," acknowledging that the FSD beta would bring "unknown difficulties."


The critical question now is what comes next. All indications have pointed to a greater appetite this year for regulating driver-assistance systems and autonomous cars, but nothing substantial has materialised to date. It seems almost certain that more FSD crashes like this one will occur as the beta expands to more drivers, and any resulting harm could be enough to compel regulators to intervene.
