STARLINK IS A BIG DEAL. HERE'S WHY

Starlink, SpaceX's plan to provide internet service through tens of thousands of satellites, is a staple of space news, with stories on the latest developments appearing every week. The broad scheme is clear, and a reasonably well-motivated person (such as your humble servant) can deduce a great deal of information from filings with the FCC. Despite this, even among expert analysts, there is still an exceptionally high degree of uncertainty about this new technology. It is not unusual to read articles comparing Starlink to OneWeb and Kuiper (among others) as though they were all comparable rivals. It is also not unusual to read about well-meaning astronomy concerns, space junk, space law, regulation, and harm. It is my hope that by the end of this very long article, the reader will be both better educated about Starlink and more inspired by it.

SpaceX is at the head of the competing launch providers and, at the same time, offers a mechanism for restructuring space. The subtext here is that the traditional satellite industry was unable to keep up with the steadily growing capability and falling cost of SpaceX's Falcon launch family, putting SpaceX in a challenging position. On the one hand, it had saturated a market worth a few billion dollars a year at most. On the other hand, it was developing an insatiable appetite for cash: to build a massive rocket with almost no paying customers, and then to launch thousands of them to Mars with no immediate economic return.

Starlink is the solution to both of those issues. By building their own satellites, SpaceX can create and define a new market for highly capable, democratised access to space communication and provide their own rocket with a revenue stream and payload, even as they cannibalise themselves, ultimately unlocking trillions of dollars of economic value. Do not underestimate the scale of Elon's ambition. Only a few trillion-dollar industries exist: oil, high-speed transportation, communications, chemicals, IT, health, agriculture, government, and defence. Space mining, lunar water, and space-based solar power are not feasible industries, despite common myths. Elon has a power play with Tesla, but communications alone offers a reliable, deep satellite and launch market.

Elon Musk's first space-related proposal was to spend $80m on a philanthropic mission to grow a plant in a lander on Mars. Building a Mars city would cost perhaps 100,000 times as much. Starlink is Elon's key bet to produce the ocean of gold needed to build a self-sustaining city on Mars philanthropically.

Starlink was conceptually born in 2012, when SpaceX realised that its clients, mainly comsat providers, had better margins than it did. Launch providers notoriously charge insane prices to put satellites in space, and yet somewhere there was a piece of the action they had missed? Elon dreamt of an internet constellation and got the ball rolling, undeterred by a near-impossible technological obstacle. Starlink's development phase has had its difficulties, but by the end of this article, you, the reader, will probably be surprised at how few problems there have been, considering the magnitude of the underlying vision.

Why do we need an immense constellation of satellites to supply the internet? And why now?

The internet has grown from a scholarly curiosity into the single most disruptive technology created in my lifetime. This is not the place for an extended debate about the internet, but I would expect global internet demand, and the wealth it brings, to continue rising rapidly, at about 25 percent a year.

Yet today, almost all of us get our internet from a tiny handful of geographically isolated monopolies. In the US, the nation has been carved up by AT&T, Time Warner, Comcast, and a handful of smaller players, who discourage competition, charge exorbitant prices for poor service, and bask in near-universal hatred.

Beyond sheer greed, there is a compelling explanation for anti-competitive behaviour among internet service providers. The internet's underlying infrastructure, microwave cell towers and optical fibre, is incredibly costly to install. It is easy to forget just how miraculous the internet's data-transfer properties are. My grandmother's first job, during the Second World War, was as a Morse code operator, in a medium that competed for strategic pre-eminence with homing pigeons! Travelling the information superhighway feels so disembodied, so incorporeal, for most of us that we forget those bits have to cross our physical world with all its borders, rivers, mountains, seas, hurricanes, natural disasters, and other annoyances.

What we need is a way to lift the flow of data off the turbulent surface of the Earth and into space, where a satellite can easily orbit the Earth 30,000 times in 5 years. This seems obvious, so why didn't anybody try it before?

In the early 1990s, Motorola (remember them?) conceived and built the Iridium constellation, the first global LEO-based communications network. By the time it entered service, cellular phones had become cheap enough that satellite phones never took off, and its main use turned out to be the niche ability to route tiny data packets from asset trackers. The Iridium network had 66 satellites (plus a few spares) in 6 separate orbital planes, the minimum number required to cover the whole globe.

If 66 satellites are enough for Iridium, why is SpaceX designing tens of thousands? What makes SpaceX different?

SpaceX came to this venture from the other direction: from launch. Thanks to their groundbreaking work on booster recovery, their cheap launches have completely cornered the market. There is not much money to be made by undercutting their competition even further, so being their own customer is the best way to use their excess capacity. Since SpaceX can launch its satellites for about a tenth of the price per kg of the original Iridium constellation, it can tackle a far broader market.

Starlink's worldwide internet would offer high-quality connectivity to every corner of the world. For the first time, internet availability would depend not on how close a specific country or city is to a strategic fibre route, but on whether it can see the sky. Entrepreneurs worldwide would have free access to the global internet, independent of their own variously inept and/or corrupt government telecom monopolies. The monopoly-breaking potential of Starlink would catalyse tremendous positive change, bringing billions of people into our future global cyber culture for the first time.

If I may digress for a paragraph, what does that mean?

For people growing up today in an age of ubiquitous access, the internet is like the air we breathe. It is always there. But that risks forgetting its immense power to bring about positive change, a change we are in the midst of right now. The internet can help people hold politicians accountable, connect with people elsewhere, exchange ideas, create new things, and unify the human race. The history of modernity is one of expanding human capacity for sharing data. First came speech and epic poetry. Then writing, which allowed the dead to communicate with the living and enabled data storage and asynchronous communication. Then the printing press, which allowed the mass production of knowledge. Then electronic communication, which sped the passage of data around the globe. Our personal note-taking devices have gradually become more sophisticated, from notebooks to mobile phones, the latter being internet-connected machines festooned with sensors and increasingly able to anticipate our needs intelligently.

As a cognitive aid, writing and computers make a person substantially more capable of transcending the limitations of their poorly formed wetware. More exciting still, humans now have both a good note-keeping system in mobile phones and a mechanism for exchanging thoughts with other people. Whereas people historically relied on speech to express the ideas they had written in their notebooks, the trend today is for notebooks to share the ideas humans create, an inversion of the conventional scheme. The logical extension of this process is a kind of collective metacognition, mediated by personal devices ever more closely integrated with our brains and with each other.

While there is room in this world for nostalgia about our diminished connection to nature and the loss of solitude, it is important to remember that technology, and technology alone, is responsible for the vast majority of humanity’s emancipation from the “natural” cycles of ignorance, premature avoidable death, violence, malnutrition, and tooth decay.

How?

Let's address the business case and design architecture of Starlink.

For Starlink to be a good business, its revenue needs to exceed its construction and operating costs. Satellite firms have historically front-loaded their expenses as capex, using specialised financing and insurance mechanisms to launch a satellite. Designing and launching a geostationary communications satellite can cost $500m and take 5 years, and the industry works much like the construction of a jet airliner or a container ship: large capital outlays, a relatively cheap operating budget, and barely enough sales to cover financing costs. The biggest problem of the original Iridium constellation, on the other hand, was that Motorola forced the operator to pay ruinous licensing fees, bankrupting the whole effort within months.

To stay in business, traditional satellite companies have had to serve specialist clients and charge high prices for their data. Despite the poor latency and relatively low bandwidth of a satellite link, airlines, remote outposts, war zones, and vital infrastructure pay about $5/MB, which is 5000 times the cost of a conventional ADSL connection.

Starlink aims to compete with commercial terrestrial ISPs, so it must be able to deliver data for less than $1/GB, preferably much less. Is that possible? Or rather, if it is possible, how?

Cheap launch is the first ingredient in the mix. Falcon currently offers launches of up to 24 t for about $60m, which works out to $2500/kg. However, this is far more than SpaceX's internal marginal cost. Starlink satellites are to be launched on reflown boosters, so the marginal cost per launch is the cost of a new second stage (maybe $4m), fairings ($1m), and ground handling (~$1m). That comes to about $6m per launch, or about $100k per satellite, more than 1000 times cheaper than a traditional comsat launch.
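The launch arithmetic above is easy to check. Below is a minimal Python sketch using the article's own round numbers; the function names are hypothetical, and the figure of 60 satellites per Falcon launch is an assumption (it is the number implied by about $6m per launch and about $100k per satellite).

```python
# Launch-cost arithmetic using the article's round-number estimates.
# Assumption: 60 Starlink satellites per Falcon launch.

def price_per_kg(launch_price_usd: float, payload_kg: float) -> float:
    """List price per kilogram delivered to orbit."""
    return launch_price_usd / payload_kg


def marginal_cost_per_satellite(second_stage_usd: float, fairings_usd: float,
                                ground_handling_usd: float,
                                sats_per_launch: int) -> float:
    """Marginal cost of one satellite's ride on a reflown booster."""
    per_launch = second_stage_usd + fairings_usd + ground_handling_usd
    return per_launch / sats_per_launch


if __name__ == "__main__":
    print(f"Falcon list price: ${price_per_kg(60e6, 24_000):,.0f}/kg")  # $2,500/kg
    cost = marginal_cost_per_satellite(4e6, 1e6, 1e6, 60)
    print(f"Marginal cost: ${cost:,.0f} per satellite")  # $100,000 per satellite
```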

However, most of Starlink will be launched on Starship. Indeed, the history of the Starlink constellation, as revealed by updated FCC filings, gives us some insight into how the internal architecture evolved once Starship became real. The constellation's total number of satellites rose from 1584 to 2825 to 7518 to 30,000. Or, if you think the total is still climbing, even more. For the first phase of growth, the minimum viable constellation is 6 planes of 60 satellites (360), with 24 planes of 60 (1440) required for complete coverage within 53 degrees of the equator. Those 24 Falcon launches could cost as little as $150m internally. Starship, on the other hand, is planned to launch up to 400 satellites at a time at a comparable cost per launch. Starlink satellites are planned to be replaced after five years, so a 30,000-satellite constellation needs 6000 replacements, and therefore 15 Starship launches, a year. This could cost as little as $100 million a year, or roughly $15 thousand per satellite.
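The replacement cadence follows directly from constellation size, lifetime and payload per launch. A small sketch, assuming the article's figures (30,000 satellites, a five-year life, 400 satellites per Starship, $100m a year); `replacement_plan` is a hypothetical helper. The same numbers give the production rate of about 16 satellites per day that comes up later.

```python
import math


def replacement_plan(constellation_size: int, lifetime_years: float,
                     sats_per_launch: int, annual_budget_usd: float):
    """Return (replacements per year, launches per year, launch cost per satellite)."""
    replacements_per_year = constellation_size / lifetime_years
    # Round up: a partially filled launch still costs a full launch.
    launches_per_year = math.ceil(replacements_per_year / sats_per_launch)
    cost_per_satellite = annual_budget_usd / replacements_per_year
    return replacements_per_year, launches_per_year, cost_per_satellite


if __name__ == "__main__":
    per_year, launches, per_sat = replacement_plan(30_000, 5, 400, 100e6)
    print(per_year, launches, round(per_sat))          # 6000.0 15 16667
    print(f"{per_year / 365:.1f} satellites per day")  # 16.4 satellites per day
```

The per-satellite figure comes out near $17k, the same ballpark as the rough $15 thousand quoted above.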

Each Falcon-launched satellite weighs 227 kg, while the Starship-launched satellites could weigh as much as 350 kg, carry third-party instruments, and be somewhat larger without exceeding the launcher's capacity.

What do the satellites cost? Starlink satellites are somewhat peculiar as satellites go. They are designed to be incredibly simple to mass-produce, and are built, stacked, and launched in a flat configuration (stackellites?). As a rule of thumb, the manufacturing cost target should be about the same as the unit launch cost. A large cost difference would be a sign of misallocated engineering capital, because further savings on the already cheaper side yield too little overall improvement. Does $100k per satellite sound fair for the first few hundred? In other words, is a Starlink satellite of similar complexity to a car?

To answer this question fully, we need to understand why a geostationary comsat can cost a thousand times as much despite not being a thousand times as complicated. More generally, why does space hardware cost so damn much? There are many explanations, but the most relevant here is that if a geostationary launch (pre-Falcon) costs more than $100m, the satellite needs to work reliably for many years to make any money. Ensuring a high likelihood of working on the first and only attempt is a painful, drawn-out process that takes years and requires hundreds of people. Costs add up, and if the launch is that costly anyway, it is easy to justify additional procedures.

Starlink breaks this model by building hundreds of satellites, iterating rapidly on early design defects, and using mass-manufacturing methods to control costs. Personally, I have no trouble imagining a Starlink production line where a technician can integrate and zip everything together in an hour or two, sustaining the necessary replacement rate of 16 satellites per day. There are many fancy components in a Starlink satellite, but I see no reason why the overall production cost of the thousandth unit off the line should not fall to $20k. Indeed, Elon tweeted in May that the cost of building the satellites was already less than the cost of launching them.

Let's select an intermediate scenario, using round numbers, to analyse "time to revenue". A single Starlink satellite costing $100k to build and launch can operate for five years. Will it pay for itself, and how fast?

A Starlink satellite will complete 30,000 orbits in five years. In each of these 90-minute orbits, the satellite spends most of its time over uninhabited ocean and perhaps just 100 seconds over a densely populated area. It can only transfer data, and therefore earn revenue, during that brief window, so it must do so as quickly as it can. Assuming the antenna can support 100 separate beams, and each beam can transmit 100 MB per second using advanced modulation such as 4096QAM, the satellite earns about $1000 per orbit at a subscriber price of $1/GB. That is enough to earn back the $100k deployment cost in just a week, which greatly simplifies the financing. After fixed costs are accounted for, the remaining 29,900 orbits are profit.
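Here is the same time-to-revenue estimate as a short Python sketch. It uses only the article's assumptions (100 beams at 100 MB/s, 100 seconds over a city per 90-minute orbit, $1/GB, $100k deployment cost) and decimal units (1 GB = 1000 MB); the helper names are hypothetical.

```python
import math


def revenue_per_orbit(beams: int, beam_rate_mb_s: float,
                      seconds_over_city: float, price_per_gb: float) -> float:
    """Revenue earned during one pass over a dense area per orbit."""
    gb_per_orbit = beams * beam_rate_mb_s * seconds_over_city / 1000  # MB -> GB
    return gb_per_orbit * price_per_gb


def payback(deploy_cost_usd: float, rev_per_orbit: float, orbit_minutes: float = 90):
    """Orbits (rounded up) and days needed to recover the deployment cost."""
    orbits = math.ceil(deploy_cost_usd / rev_per_orbit)
    days = orbits * orbit_minutes / (60 * 24)
    return orbits, days


if __name__ == "__main__":
    rev = revenue_per_orbit(beams=100, beam_rate_mb_s=100,
                            seconds_over_city=100, price_per_gb=1.0)
    print(rev)                           # 1000.0
    print(payback(100_000, rev))         # (100, 6.25)
    print(5 * 365.25 * 24 * 60 / 90)     # 29220.0 orbits in five years
```

The payback period of 6.25 days is the "just a week" in the text, and about 29,000 orbits is where the round figure of 30,000 comes from.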

These assumptions could obviously be off by a lot in either direction. But in any case, being able to put a capable communications constellation into LEO for $100k, or even $1m, per unit presents a significant business opportunity. A Starlink satellite will deliver 30 PB of data over its lifetime at an amortised cost of $0.003/GB, even taking into account its ludicrously low utilisation fraction, with virtually no marginal cost increase for transmission over longer distances.
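The lifetime figures can be reproduced the same way. This sketch assumes the round numbers above (1000 GB per orbit, 30,000 orbits, $100k deployment cost, 100 useful seconds per 5400-second orbit) and decimal petabytes (1 PB = 10^6 GB); `lifetime_economics` is a hypothetical name. The utilisation term makes the "ludicrously low" fraction explicit.

```python
def lifetime_economics(gb_per_orbit: float, orbits: int, deploy_cost_usd: float,
                       seconds_over_city: float, orbit_seconds: float = 90 * 60):
    """Lifetime data volume (GB), amortised cost per GB, and utilisation fraction."""
    lifetime_gb = gb_per_orbit * orbits
    cost_per_gb = deploy_cost_usd / lifetime_gb
    utilisation = seconds_over_city / orbit_seconds
    return lifetime_gb, cost_per_gb, utilisation


if __name__ == "__main__":
    gb, cost, util = lifetime_economics(1000, 30_000, 100_000, 100)
    print(f"{gb / 1e6:.0f} PB, ${cost:.4f}/GB, utilisation {util:.1%}")
    # 30 PB, $0.0033/GB, utilisation 1.9%
```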

To understand how convincing this model is, let's quickly compare it with two other models for consumer data distribution: traditional optical fibre, and a satellite constellation built by a company that doesn't specialise in launch.

SEA-ME-WE 4 is a major submarine cable, commissioned in 2005, that runs from France to Singapore. It can carry 1.28 Tb/s and cost around $500m to deploy. If it runs at 100% capacity for 10 years, with a 100% capital cost overhead, the price per bit works out to $0.02/GB. Transatlantic cables are shorter and a little cheaper, but in the long line of parties who need to be paid to deliver data, the undersea cable is only one. By the middle-of-the-road calculation, Starlink is 8 times cheaper, all in, than the undersea cable alone.
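The cable figure follows from capacity, lifetime and capital cost. A minimal sketch, assuming the paragraph's idealised full utilisation and reading the 100% overhead as doubling the capex; the helper name is hypothetical, and the `utilisation` parameter is there because real cables rarely run at full capacity.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def cable_cost_per_gb(capacity_tbps: float, capex_usd: float, years: float,
                      overhead: float = 1.0, utilisation: float = 1.0) -> float:
    """Capital cost per GB carried over the cable's life (overhead=1.0 doubles capex)."""
    gb_per_second = capacity_tbps * 1e12 / 8 / 1e9  # Tb/s -> GB/s
    lifetime_gb = gb_per_second * SECONDS_PER_YEAR * years * utilisation
    return capex_usd * (1 + overhead) / lifetime_gb


if __name__ == "__main__":
    print(f"${cable_cost_per_gb(1.28, 500e6, 10):.3f}/GB")  # $0.020/GB
```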

How is this possible? Each Starlink satellite contains all the complex electronic switching gear found linking optical fibres together, but it uses empty vacuum instead of costly, fragile cable to carry data. Transmitting information through space disintermediates all the cosy, moribund monopolies and lets customers communicate with far less hardware in between.

Let's contrast that with OneWeb, a rival constellation builder. OneWeb aims to launch a constellation of approximately 600 satellites on a variety of commercial launchers for about $20,000/kg. Each satellite weighs about 150 kg, implying a best-case launch cost of about $3m per unit. Satellite hardware is forecast to cost $1m each, with a total constellation production cost of $2.6b by 2027. The OneWeb test satellites demonstrated a peak data rate of 50 MB/s, presumably for each of 16 beams. Following the same process as the Starlink estimate above, each OneWeb satellite earns about $80 of revenue per orbit, or a total of $2.4 million in 5 years, perhaps only covering launch costs once data services to remote areas are included. This averages out to approximately $1.70/GB.
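Applying the Starlink method to OneWeb's published figures, as a sketch: 16 beams at 50 MB/s, 100 seconds over a city per orbit, 30,000 orbits, $3m launch (150 kg at $20,000/kg) and $1m hardware. The helper name is hypothetical, and the pricing model is the article's $1/GB assumption rather than anything OneWeb has stated.

```python
def cost_per_gb(beams: int, beam_rate_mb_s: float, seconds_over_city: float,
                orbits: int, launch_cost_usd: float, hardware_cost_usd: float):
    """Per-orbit data (GB), lifetime data (GB), and deployment cost per GB."""
    gb_per_orbit = beams * beam_rate_mb_s * seconds_over_city / 1000  # MB -> GB
    lifetime_gb = gb_per_orbit * orbits
    return gb_per_orbit, lifetime_gb, (launch_cost_usd + hardware_cost_usd) / lifetime_gb


if __name__ == "__main__":
    launch = 150 * 20_000  # 150 kg at $20,000/kg -> $3m
    per_orbit, lifetime, usd_per_gb = cost_per_gb(16, 50, 100, 30_000, launch, 1e6)
    print(per_orbit, lifetime, round(usd_per_gb, 2))  # 80.0 2400000.0 1.67
    print(round(usd_per_gb / 17, 2))                  # 0.1 ($/GB if 17x cheaper)
```

At $1/GB, 80 GB per orbit is the $80 per orbit in the text, and the last line divides by the 17x factor quoted in the next paragraph.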

Gwynne Shotwell was recently quoted as saying that Starlink is 17 times cheaper and faster than OneWeb, which would imply a comparable cost of $0.10/GB. This refers to the cost of data distribution in Starlink's initial configuration, with less optimised manufacturing, Falcon launches, and minimal data offerings only in the northern US. It turns out that SpaceX has an insurmountable advantage over the competition: it can launch a more capable satellite today for 15 times less per unit. In fact, it is possible to imagine SpaceX launching 30,000 satellites by 2027 for a total cost of less than $1b, most of which will be self-funded by that point. Starship will raise this advantage to a factor of 100 or more.

For OneWeb and other optimistic constellation builders, I'm sure there is a less grim analysis, but I'm not quite certain how it would go.

Morgan Stanley recently estimated that Starlink satellites would each cost $1 million to build and $830k to launch, a figure Gwynne Shotwell described as "waaaaayyyy off". I find it curious that these projected costs are close to our OneWeb estimate, but roughly 10 times our estimate for the initial version of Starlink. Using Starship and mass-producing the satellites could reduce the cost of satellite deployment to about $35k per unit, an incredibly low number.

One final point is to compare Starlink's revenue per unit of solar power generated. According to photographs on the website, each satellite's solar array is about 60 m², which means it generates an average of around 3 kW, or 4.5 kWh over an entire orbit. With a ballpark estimate of $1000 in revenue per orbit, each satellite earns around $220/kWh. This is 10,000 times the wholesale price of solar-generated electricity, showing once again that space-based solar power is a losing proposition. Modulating microwaves with data is a huge value-add!
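The revenue-per-energy comparison in a few lines, assuming the article's 3 kW average power, 1.5-hour orbit and $1000 per orbit; the function name is hypothetical. The last line simply runs the 10,000x ratio backwards to show the implied wholesale price, not an independently sourced one.

```python
def revenue_per_kwh(avg_power_kw: float, orbit_hours: float, revenue_per_orbit_usd: float):
    """Energy generated per orbit (kWh) and revenue earned per kWh of it."""
    energy_kwh = avg_power_kw * orbit_hours
    return energy_kwh, revenue_per_orbit_usd / energy_kwh


if __name__ == "__main__":
    kwh, usd_per_kwh = revenue_per_kwh(3.0, 1.5, 1000)
    print(kwh, round(usd_per_kwh))          # 4.5 222
    print(round(usd_per_kwh / 10_000, 3))   # 0.022 (implied wholesale $/kWh)
```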

Architecture

In the previous section, I glossed over a significantly non-trivial aspect of the Starlink architecture: the way it deals with the decidedly non-uniform population density of humans. Every Starlink satellite can produce focused beams that form spots on the Earth's surface. All subscribers within a single beam spot must share its bandwidth. The size of this spot is set by fundamental physics: its width is roughly (satellite altitude × microwave wavelength / antenna diameter), which for Starlink satellites is expected to be a few kilometres across at best.
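The spot-width rule of thumb as code. This is a sketch of the diffraction estimate only; the 1 cm wavelength and 1 m antenna are assumptions (they match the Ka-band and array-size figures used later in this article), and the altitudes are illustrative.

```python
def spot_width_km(altitude_km: float, wavelength_m: float, antenna_diameter_m: float) -> float:
    """Rough diffraction-limited spot width: altitude x wavelength / antenna diameter."""
    return altitude_km * wavelength_m / antenna_diameter_m


if __name__ == "__main__":
    # Assumptions: ~1 cm (Ka band) wavelength and a ~1 m antenna.
    for altitude in (330, 550, 1150):
        print(altitude, "km ->", round(spot_width_km(altitude, 0.01, 1.0), 1), "km")
```

Lower orbits shrink the spot linearly, which is one reason the altitude trade-off discussed below matters.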

Many cities have a population density of about 1000 people per square kilometre, although some are much higher. In parts of Tokyo or Manhattan, more than 100,000 residents may fall within the footprint of a single beam. Fortunately, all such high-density areas have a dynamic wholesale broadband market, not to mention highly developed mobile phone networks. Furthermore, when a well-designed constellation has several satellites overhead at any one time, the data rate can be increased by spatial separation as well as by frequency allocation. In other words, hundreds of satellites might aim their most effective beams at the same area, and subscribers there would use ground terminals that split the demand among those satellites.

Although remote, rural, and suburban areas will be the most important market in the initial stages of the constellation, further launches will be financed entirely by offering better service to high-density cities. This is the opposite of the traditional business expansion scenario, in which competitive city-based services eventually see diminishing margins as they expand outwards into poorer, sparser regions.

What happens to a subscriber's data once it reaches the satellite? In the initial version of Starlink, satellites relay it directly back down to dedicated ground stations near the service areas. This configuration is called a "bent pipe". In the future, Starlink satellites will gain the ability to communicate with each other by laser. Satellites would still be at full capacity over dense cities, but data will spread out in two dimensions through the laser network. In practice, this means the satellite network has immense latent backhaul capacity, so subscriber data can be "regrounded" wherever it fits. I expect SpaceX ground stations to be co-located with carrier hotels outside metropolitan centres.

It turns out that satellite-to-satellite communication is non-trivial when the satellites are not travelling together. The most recent FCC filing specifies 11 families of satellites with different orbits. Within a given family, neighbouring satellites travel at the same altitude, inclination, and eccentricity, so laser links can fairly easily track their immediate neighbours. But closing speeds between families are measured in km/s, so inter-family communication, if any, must use short-lived, fast-steered microwave links.

The topology of these orbit families is super esoteric and not really important to the business case, but I find it beautiful, so I'm going to include it. Skip to "Fundamental physical limits" below if you're not that excited about this section.

A torus, or donut, is the surface defined by two radii. Drawing the familiar circles on a torus, either around the tube or around the central axis, is quite trivial. It may interest you to discover that there are two other families of circles that can be drawn on a torus, each passing both through the central hole and around the outside. This pattern is known as the Villarceau circles, and I used it when I designed the toroid for the Coup de Foudre Tesla coil at Burning Man 2015.

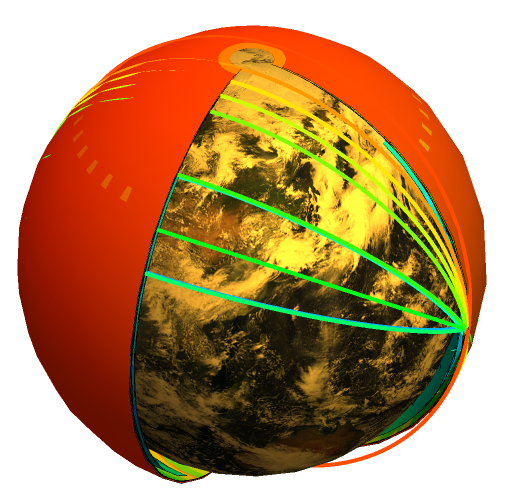
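For readers who want to see the Villarceau circles numerically, here is a small sketch. It uses the standard textbook construction (a circle of radius R centred a distance r from the torus centre, lying in a plane tilted by arcsin(r/R)), not anything derived from SpaceX filings; the torus dimensions and function names are illustrative.

```python
import math


def villarceau_point(R: float, r: float, t: float):
    """Point at parameter t on one Villarceau circle of a torus (major radius R > tube radius r).

    The circle has radius R, is centred at (0, r, 0), and lies in a plane through
    the torus centre tilted by asin(r / R) about the y-axis.
    """
    alpha = math.asin(r / R)
    x = R * math.cos(t) * math.cos(alpha)
    y = r + R * math.sin(t)
    z = R * math.cos(t) * math.sin(alpha)
    return x, y, z


def torus_residual(R: float, r: float, point) -> float:
    """Zero when the point lies on the torus (sqrt(x^2 + y^2) - R)^2 + z^2 = r^2."""
    x, y, z = point
    return (math.hypot(x, y) - R) ** 2 + z ** 2 - r ** 2


if __name__ == "__main__":
    R, r = 3.0, 1.0
    worst = max(abs(torus_residual(R, r, villarceau_point(R, r, 2 * math.pi * k / 360)))
                for k in range(360))
    print(f"max residual over the circle: {worst:.1e}")  # round-off level only
```

At t = -π/2 the circle touches the inner equator (through the hole) and at t = π/2 the outer equator, which is the "through the hole and around the outside" behaviour described above.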

While orbits are generally ellipses rather than circles, a comparable construction applies in the case of Starlink. A group of 4500 satellites in a sequence of orbital planes, all at a common inclination, forms a continuously moving sheet over the Earth's surface. At a given latitude, the northward-moving sheet turns around and heads south again. The orbits would be very slightly eccentric to prevent collisions, so that the northward-moving sheet is a few kilometres higher (or lower) than the southward-moving sheet. Together, as shown in this exaggerated illustration, the two sheets form a blown-out torus.

Recall that communication is between neighbouring satellites within this torus. In general, there are no long-lived communication links directly between satellites in the northward-moving and southward-moving sheets, as their closing speeds are too high to track reliably with a laser. Instead, data passing between the sheets is routed across the top or bottom of the torus.

In total, the 30,000 satellites will be arranged in 11 nested tori, with a large gap around the orbit of the International Space Station! This diagram illustrates, without exaggerated eccentricity, how all these sheets stack up.

Finally, the optimum operating altitude is worth considering. There is a trade-off between low altitude, allowing smaller beam spots and higher data rates, and high altitude, allowing fewer satellites to cover the entire world. Over time, SpaceX's FCC filings have trended towards lower altitudes, especially as Starship's development makes the rapid deployment of larger constellations possible.

Low altitude has other benefits, including a substantially decreased risk of collision with debris and of lasting harm from hardware failure. Because of increased atmospheric drag, the lowest-flying Starlink satellites (330 km) will burn up within weeks of losing attitude control. Indeed, 330 km is lower than almost all other satellites, and the built-in krypton electric thruster, together with a compact configuration, would be sufficient to sustain altitude. In theory, a sufficiently pointy electrically propelled satellite might stably orbit as low as 160 km, but while there are a few other data-rate-increasing tricks to try, I don't expect SpaceX to go that low.

Fundamental physical limits

Although it seems unlikely that satellite deployment costs will ever drop far below $35k, even with sophisticated digital production and fully reusable Starships, the ultimate physical limits on satellite performance are less obvious. Based on the round numbers of 100 beams, each capable of transmitting 100 MB/s, the analysis above assumed a peak data bandwidth of about 80 Gbps.

The Shannon-Hartley theorem sets the ultimate limit on channel capacity: C = B log2(1 + SNR), where B is the bandwidth. Bandwidth is restricted by the available spectrum allocation, while SNR is limited by the power available on the satellite, background noise, and channel crosstalk due to imperfect antennas. Processing rate is another salient restriction. The latest Xilinx UltraScale+ FPGAs have GTM serial transceivers of up to 58 Gbps, which is a reasonable approximation of current channel throughput limits short of designing custom ASICs. Even then, 58 Gbps, most likely in the Ka or V bands, would require a significant frequency allocation. There is more spectrum available in the V band (40-75 GHz), but there is also more atmospheric absorption, especially in areas with high humidity.
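To see what a 58 Gbps channel implies for spectrum, the Shannon-Hartley formula can be inverted to give the minimum bandwidth at a given SNR. A minimal sketch; the SNR values are hypothetical examples, not Starlink link-budget figures, and real systems fall short of the Shannon limit.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + SNR)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def bandwidth_needed_hz(rate_bps: float, snr_db: float) -> float:
    """Minimum bandwidth to carry rate_bps at a given SNR (in dB), per Shannon-Hartley."""
    snr_linear = 10 ** (snr_db / 10)
    return rate_bps / math.log2(1 + snr_linear)


if __name__ == "__main__":
    # Hypothetical SNR values; real link budgets depend on power, noise and crosstalk.
    for snr_db in (10, 20, 30):
        ghz = bandwidth_needed_hz(58e9, snr_db) / 1e9
        print(f"58 Gbps at {snr_db} dB SNR needs at least {ghz:.1f} GHz")
```

Even at a generous SNR the answer is several gigahertz, which is why the text stresses the need for a significant frequency allocation.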

Will 100 beams be practical? This question has two aspects: beam width and the density of phased array antenna elements. The beam's angular width is set by the wavelength divided by the antenna diameter. Digital phased array antennas are a specialised technology, but their maximum practical size is set by the width of a reflow oven (around 1 m), since anything larger calls for difficult RF interconnects. Ka band wavelengths are about a centimetre, implying a beam half-width of about 0.01 radians, or 0.02 radians full width. Assuming a useful beam solid angle of 1 steradian (similar to the field of view of a 50 mm camera lens), about 2500 distinct beams fit in this area. By linearity, forming 2500 beams requires at least 2500 antenna elements in the array, which is feasible, but not easy. It could also get very warm in use!
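A sketch of the beam-count estimate. It assumes a 1 cm wavelength, a 1 m array, a 1 steradian field of regard, and, as an interpretation of the text, beams packed on a square grid at twice the λ/D half-width (about 0.02 rad); the function names are hypothetical.

```python
def half_width_rad(wavelength_m: float, aperture_m: float) -> float:
    """Diffraction scale of the beam, roughly wavelength / aperture."""
    return wavelength_m / aperture_m


def beam_count(solid_angle_sr: float, full_width_rad: float) -> float:
    """How many beams of a given full angular width tile a patch of sky (square grid)."""
    return solid_angle_sr / full_width_rad ** 2


if __name__ == "__main__":
    hw = half_width_rad(0.01, 1.0)      # ~1 cm Ka-band wavelength, ~1 m array
    beams = beam_count(1.0, 2 * hw)     # assumption: beams spaced at twice the half-width
    print(round(hw, 3), round(beams))   # 0.01 2500
```

Packing beams at the half-width instead would quadruple the count, so the 2500 figure should be read as an order of magnitude.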

All up, 2500 channels each supporting 58 Gbps add up to a staggering throughput of about 145 Tbps. For comparison, total internet traffic is expected to average 640 Tbps in 2020. This is good news for anyone concerned about inherently poor satellite bandwidth. Global internet traffic may exceed 800 Tbps by 2026, when the 30,000-satellite constellation could be operational. If 50 percent of this is served at any moment by ~500 satellites over heavily populated cities, each satellite will need a peak data rate of roughly 800 Gbps, 10 times more than our initial simple calculation, theoretically generating 10 times the revenue.
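The aggregate-capacity arithmetic, as a sketch using the paragraph's figures (2500 channels at 58 Gbps; 800 Tbps of global traffic, half of it served by ~500 satellites; 80 Gbps as the earlier baseline). The helper names are hypothetical and the traffic split is the article's assumption.

```python
def array_throughput_tbps(channels: int, gbps_per_channel: float) -> float:
    """Aggregate throughput of one satellite's array, in Tbps."""
    return channels * gbps_per_channel / 1000


def per_satellite_peak_gbps(global_traffic_tbps: float, share_served: float,
                            satellites_over_cities: int) -> float:
    """Peak rate each satellite must carry if a share of global traffic is spread evenly."""
    return global_traffic_tbps * 1000 * share_served / satellites_over_cities


if __name__ == "__main__":
    print(array_throughput_tbps(2500, 58))    # 145.0
    peak = per_satellite_peak_gbps(800, 0.5, 500)
    print(peak, peak / 80)                    # 800.0 10.0
```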

For a satellite in a 330 km orbit, a 0.01 radian beam covers an area of about 10 km². Within this zone, extraordinarily densely populated cities such as Manhattan may have 300,000 inhabitants. If they were all watching Netflix (7 Mbps in HD), the overall data demand would be about 2000 Gbps, roughly 35 times the hard cap set by FPGA serial outputs. There are two ways around this, one of which is physically feasible.
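The Manhattan worst case in numbers. This sketch approximates the beam footprint as a square of side altitude × 0.01 rad (an assumption; a circular footprint would differ by a constant factor) and uses the article's 300,000 residents, 7 Mbps per HD stream, and 58 Gbps per channel; the function names are hypothetical.

```python
def beam_footprint_km2(altitude_km: float, beam_width_rad: float) -> float:
    """Square approximation of the ground footprint of one beam."""
    side_km = altitude_km * beam_width_rad
    return side_km ** 2


def demand_gbps(residents: int, mbps_each: float) -> float:
    """Aggregate demand if every resident streams at mbps_each simultaneously."""
    return residents * mbps_each / 1000


if __name__ == "__main__":
    print(round(beam_footprint_km2(330, 0.01), 1))  # 10.9
    demand = demand_gbps(300_000, 7)
    print(demand, round(demand / 58, 1))            # 2100.0 36.2
```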

The first is to launch enough satellites that, at any moment, more than 35 are in the sky over the high-demand areas. Using 1 steradian again as a reasonable addressable patch of sky and 400 km as an average orbital altitude, this implies a satellite density of 0.0002/km², or 100,000 satellites if uniformly spaced across the globe. Note that SpaceX's selected orbits increase density over the heavily populated latitudes of 20-40 degrees, and 30,000 satellites begins to look like a magic number.
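The density estimate can be checked with a crude model. This sketch assumes the patch of sky seen within 1 steradian at 400 km covers about (solid angle × altitude²) of area, ignores Earth curvature, and uses a mean Earth radius of 6371 km; the names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0
EARTH_SURFACE_KM2 = 4 * math.pi * EARTH_RADIUS_KM ** 2


def required_density(sats_overhead: int, solid_angle_sr: float, altitude_km: float) -> float:
    """Satellites per km^2 so that sats_overhead fall within the addressable sky patch.

    Small-angle approximation: the patch covers about solid_angle * altitude^2
    of area at orbital height (Earth curvature ignored).
    """
    patch_area_km2 = solid_angle_sr * altitude_km ** 2
    return sats_overhead / patch_area_km2


if __name__ == "__main__":
    density = required_density(35, 1.0, 400)
    print(f"{density:.1e} per km^2, {density * EARTH_SURFACE_KM2:,.0f} satellites globally")
```

The result is roughly 110,000 satellites for uniform coverage; concentrating them at 20-40 degrees of latitude is what brings the needed number down towards 30,000.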

The second idea is much cooler, but unfortunately improbable. Recall that the beam width is determined by the width of the antenna array. What if, much as radio telescopes like the Very Large Array do, multiple antenna arrays on separate satellites combined forces to synthesise a narrower beam? This approach is fraught with difficulties, since the baselines between satellites would have to be tracked to sub-millimetre accuracy to maintain the beam phase as the satellites move about. And even if this were feasible, the low density of satellites in the sky would leave the resulting beam with extremely poorly confined side lobes. The beam spot on the ground could be limited to a few millimetres across (small enough to pick out a mobile phone antenna), but there would be millions of them, because of weak intermediate nulling. This loss is an example of the thinned-array curse.

This suggests that dividing channels by angular separation, with satellites scattered across the sky, produces sufficient data rate increases without breaching the laws of physics.

Use cases

What is the Starlink customer profile? The usual use case is hundreds of millions of residential subscribers with a pizza-box-sized antenna on their roof, but there are major opportunities for other revenue sources.

In remote and rural areas, ground stations won't need phased array antennas to maximise bandwidth, so smaller consumer terminals are feasible. These range from IoT asset trackers to pocket-sized satellite tablets, emergency beacons, and monitoring devices for animal research.

Starlink can provide primary and backup backhaul for cellular networks in heavily populated urban environments. Every cell tower could have a high-performance ground station mounted on top, and use grid-supplied electricity for last-mile amplification and transmission.

Finally, the extremely low latency provided by VLEO satellites makes Starlink valuable even in congested areas during the initial roll-out. Financial institutions are ready and able to pay top dollar to get critical information just a little faster from any corner of the world. The speed of light in a vacuum is around 50 percent higher than in glass, so although Starlink data takes a longer path because of the hop into space, the faster propagation more than compensates for the detour over longer distances.
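The latency argument reduces to simple arithmetic. The sketch below compares one-way delay through fibre with a crude "straight up, across, and straight down" vacuum path. The fibre index of 1.47, the 550 km altitude, and the 5570 km transatlantic-scale route are assumptions for illustration; the model ignores Earth's curvature, the extra length of real fibre routes, satellite-to-satellite hops, and switching delays.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s
FIBRE_INDEX = 1.47        # assumed group index of optical fibre
SHELL_ALT_KM = 550.0      # assumed satellite altitude, below the 600 km ceiling


def fibre_latency_ms(ground_km, index=FIBRE_INDEX):
    """One-way delay through fibre laid along the great circle (optimistic for fibre)."""
    return ground_km * index / C_KM_PER_S * 1000.0


def starlink_latency_ms(ground_km, alt_km=SHELL_ALT_KM):
    """One-way delay for a crude up, across, and down vacuum path.

    Ignores Earth's curvature, inter-satellite hops, and switching delays."""
    return (ground_km + 2.0 * alt_km) / C_KM_PER_S * 1000.0


def break_even_km(alt_km=SHELL_ALT_KM, index=FIBRE_INDEX):
    """Ground distance where both delays match: d * n = d + 2h."""
    return 2.0 * alt_km / (index - 1.0)


for route, km in [("short hop", 1000.0), ("transatlantic scale", 5570.0)]:
    print(f"{route} ({km:.0f} km): fibre {fibre_latency_ms(km):.1f} ms, "
          f"Starlink {starlink_latency_ms(km):.1f} ms")
print(f"break-even at roughly {break_even_km():.0f} km")
```

Under these assumptions fibre wins on short routes, the two break even at roughly 2340 km, and beyond that the vacuum path pulls ahead. Real fibre routes are rarely great circles, which would pull the break-even point closer.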

Impacts

This final section of the blog deals with impacts. Although this blog is meant to address misconceptions across the board, some of the most controversial topics are its future impacts. My goal here is to present the data without too much editorialising. I don't have a crystal ball, nor do I have any inside information from SpaceX.

For me, the most important consequence is improved access to the internet. Even my home town of Pasadena, a thriving technological hub in a metropolitan area of millions, home to many observatories, a world-class university, and the largest NASA hub, has very few options for internet service. Internet companies across the US and the rest of the world have been rent-seeking, content to skim off their $50 a month in a comfortable, uncompetitive environment. Anything that comes out of the wall of a house is arguably a utility, but internet access is far less standardised than water, power, or gas.

The trouble with the status quo is that, unlike water, electricity, and gas, the internet is still young and growing rapidly. The ways we use it are also changing us, and its most transformative use has not been invented yet. But the potential for competition and creativity is choked by "bundled" internet plans. Billions of people are left behind by the internet transition for no reason other than an accident of birth, or because their country is a long way from a major undersea cable. In vast areas of the world, internet access is provided by geostationary satellites at prohibitive prices.

Starlink, spraying bits from the sky continuously, totally disrupts this model. I don't know of a better way to bring billions of unconnected people online. SpaceX is on the road to becoming an internet content provider and, perhaps, a rival to Google and Facebook as an internet business. I bet you didn't see that coming.

It is not obvious that satellite internet is the way to go. SpaceX, and only SpaceX, is in a position to build a vast internet constellation quickly, and only SpaceX had the ambition to spend a decade trying to crack the government-military hegemony over space launch. Even if Iridium had beaten mobile phones to market by a decade, with traditional launch services it might never have achieved wide acceptance. Without SpaceX and its unique business model, there is a fair chance that there would never be a global internet satellite constellation.

Astronomy is the second major impact. Following the launch of the first 60 Starlink satellites, there was an outpouring of criticism from the international astronomy community that a massively expanded number of satellites would spoil their access to the night sky. There is a saying that an astronomer's output is directly proportional to the size of their telescope. Doing astronomy in the modern era is, without exaggeration, a supremely difficult task: a relentless arms race between improving technology and ever-increasing light pollution and other sources of noise.

Thousands of bright satellites streaking through the image are the last thing any astronomer needs. In fact, the first Iridium constellation was somewhat infamous for its "flares", caused by large, mirror-like panels that could reflect sunlight onto small patches of the Earth. These could sometimes be as bright as a quarter moon, and could even damage sensitive astronomical sensors. There are also still legitimate questions about Starlink's incursion into radio bands used for radio astronomy.

If you download a satellite tracker app, it is easy to see hundreds of satellites flying overhead on a clear evening. Satellites are visible after sunset or before sunrise, when the ground is dark but the sun's rays still illuminate the satellites passing overhead. Later in the night, any overhead satellites are within the Earth's shadow and are virtually invisible. They're small, incredibly far away, and moving very fast. It's not unlikely for one to cover a distant star for less than a millisecond, but I imagine it would be a huge hassle even to notice.
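The "less than a millisecond" figure can be checked with one line of geometry. For a satellite passing straight overhead, its angular size is L/h and its angular rate is v/h, so the altitude cancels and the time it takes to cross a point-like star is just its length divided by its orbital speed. The 3 m satellite length and the 550 km altitude are hypothetical round numbers, and the sketch ignores Earth's rotation and the star's own angular size.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's gravitational parameter, km^3/s^2
R_EARTH_KM = 6371.0             # mean Earth radius, km


def orbital_speed_km_s(alt_km):
    """Circular orbital speed at the given altitude."""
    return math.sqrt(MU_EARTH_KM3_S2 / (R_EARTH_KM + alt_km))


def occultation_ms(sat_length_m, alt_km):
    """Time for a satellite passing straight overhead to cross a point-like star.

    Angular size L/h divided by angular rate v/h: the altitude cancels, leaving L/v.
    Earth's rotation and the star's angular size are ignored."""
    return sat_length_m / (orbital_speed_km_s(alt_km) * 1000.0) * 1000.0


print(f"{occultation_ms(3.0, 550.0):.2f} ms")  # hypothetical 3 m satellite at 550 km
```

For these numbers the crossing takes roughly 0.4 ms. Far from the zenith only part of the orbital velocity is across the line of sight, so crossings there can last somewhat longer.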

Much of the outcry over Starlink's brightness arose because the orbital plane of the first launch was closely aligned with the Earth's terminator, giving Europe, then in late spring, a clear view of the satellites flying overhead around sunset. Furthermore, calculations based on early FCC filings showed that satellites orbiting at 1150 km would remain visible even after the end of astronomical twilight. There are three twilight phases, civil, nautical, and astronomical, ending when the sun is 6, 12, and 18 degrees below the horizon, respectively. At the end of astronomical twilight, the sun's rays pass about 320 km above the observer at the zenith, just above the atmosphere. Based on the Starlink website, I assume that all satellites will be deployed below 600 km. In this scenario, satellites may be visible at dusk but not long after nightfall, significantly reducing the possible effect on astronomy.
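The twilight geometry can be sketched with a spherical Earth and parallel sunlight. When the sun is δ degrees below the horizon, the edge of Earth's shadow crosses the zenith at a height R(1/cos δ - 1), so a satellite directly overhead at altitude h stays sunlit until cos δ = R/(R + h). This minimal sketch assumes R = 6371 km and ignores atmospheric refraction and the atmosphere's own shadow; on those assumptions the 18 degree case comes out near 328 km, close to the 320 km quoted above. The 550 km altitude is an assumed example below the 600 km ceiling.

```python
import math

R_EARTH_KM = 6371.0  # mean Earth radius; spherical Earth assumed


def zenith_shadow_height_km(sun_depression_deg):
    """Height at which the edge of Earth's shadow crosses the observer's zenith."""
    return R_EARTH_KM * (1.0 / math.cos(math.radians(sun_depression_deg)) - 1.0)


def last_lit_depression_deg(alt_km):
    """Solar depression at which a satellite directly overhead enters Earth's shadow."""
    return math.degrees(math.acos(R_EARTH_KM / (R_EARTH_KM + alt_km)))


for phase, depression in [("civil", 6), ("nautical", 12), ("astronomical", 18)]:
    print(f"end of {phase} twilight: shadow edge at "
          f"{zenith_shadow_height_km(depression):.0f} km")
for alt in (550.0, 1150.0):
    print(f"satellite at {alt:.0f} km overhead stays lit until the sun is "
          f"{last_lit_depression_deg(alt):.1f} degrees down")
```

On these assumptions a satellite at 550 km overhead stays lit until the sun is about 23 degrees down, a few degrees past the end of astronomical twilight, while one at 1150 km stays lit until about 32 degrees, deep into the night.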

Orbital debris is the third concern. In a previous post, I pointed out that, owing to atmospheric drag, satellites and debris below 600 km deorbit within a few years, significantly decreasing the risk of Kessler syndrome. I think SpaceX gets a lot of hate for trying to launch thousands of satellites, as if its engineers had never thought about debris. Looking at the specifics of the Starlink implementation, I find it hard to imagine a better approach to debris mitigation.

Satellites are launched into a 350 km orbit and then raised to their target orbit using an onboard thruster. Instead of lingering at a higher altitude for thousands of years, any satellite that fails after launch will de-orbit within weeks. Free entry assessment requires this implementation technique. In addition, the Starlink satellites are flat, so if they lose attitude control and tumble, their cross-section, and with it their orbital drag, rises significantly.
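The de-orbit timescales above can be sketched with the textbook drag decay rate for a circular orbit, da/dt = -ρ sqrt(μa) C_D A/m, summed altitude step by altitude step. This is a minimal illustration, not a lifetime prediction: the piecewise-exponential densities are illustrative values close to published tables for average solar activity (real densities swing by roughly an order of magnitude over the solar cycle), and the default mass, area, and drag coefficient are hypothetical round numbers, not Starlink specifications.

```python
import math

MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH_M = 6.371e6        # mean Earth radius, m

# (base altitude km, density at base kg/m^3, scale height km); illustrative
# values for average solar activity, not a validated atmosphere model.
ATMOSPHERE = [
    (150, 2.070e-9, 22.523),
    (180, 5.464e-10, 29.740),
    (200, 2.789e-10, 37.105),
    (250, 7.248e-11, 45.546),
    (300, 2.418e-11, 53.628),
    (350, 9.518e-12, 53.298),
    (400, 3.725e-12, 58.515),
    (450, 1.585e-12, 60.828),
    (500, 6.967e-13, 63.822),
    (600, 1.454e-13, 71.835),
]


def density(alt_km):
    """Piecewise-exponential air density (kg/m^3), valid from 150 km upward."""
    for base, rho0, scale in reversed(ATMOSPHERE):
        if alt_km >= base:
            return rho0 * math.exp(-(alt_km - base) / scale)
    raise ValueError("the toy model starts at 150 km")


def decay_days(start_km, mass_kg=260.0, area_m2=10.0, drag_coeff=2.2,
               end_km=150.0, step_km=1.0):
    """Days for a circular orbit to sink from start_km to end_km under drag.

    Defaults for mass, area, and drag coefficient are hypothetical. Sums
    dt = dh / (rho * sqrt(mu * a) * Cd * A / m) with a midpoint rule; below
    end_km re-entry takes only hours, so it is ignored."""
    ballistic = drag_coeff * area_m2 / mass_kg  # m^2/kg
    seconds = 0.0
    for i in range(round((start_km - end_km) / step_km)):
        h_km = start_km - (i + 0.5) * step_km
        a_m = R_EARTH_M + h_km * 1000.0
        sink_rate = density(h_km) * math.sqrt(MU_EARTH * a_m) * ballistic  # m/s
        seconds += step_km * 1000.0 / sink_rate
    return seconds / 86_400.0


for alt in (350.0, 550.0):
    print(f"{alt:.0f} km: about {decay_days(alt):.0f} days")
```

With these toy numbers the 350 km case comes out at a couple of weeks and the 550 km case at more than a year, in line with the "weeks" versus "a few years" contrast in the text. Because the decay rate is proportional to C_D A/m, doubling `area_m2`, as a flat satellite tumbling face-on might, halves the lifetime.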

Though it is not widely known, SpaceX pioneered the use of non-explosive alternatives to frangible bolts in space launch. To separate stages, spacecraft, fairings, and so on, nearly every other launch provider uses explosive bolts, which raises the risk of orbital debris. SpaceX also often deliberately de-orbits its upper stages rather than leaving them to float around indefinitely, slowly decaying and disintegrating in the harsh space environment.

The last issue I want to cover here is the possibility that SpaceX will simply replace the current internet monopoly with a monopoly of its own. SpaceX is already on course for a complete launch monopoly in an open marketplace. Only the demand of rival governments for guaranteed military access to space keeps their costly and outdated rockets in service, rockets mostly built by massive monopolistic defence contractors.

It is entirely conceivable that by 2030 SpaceX will be launching 6000 of its own satellites a year, plus a few spy satellites for old times' sake. SpaceX's inexpensive, reliable satellites will sell "rack space" to instruments built by third parties. Any university that can build a space-rated camera will be able to put it into orbit without having to pay for an entire satellite platform. And as traditional manufacturers fade into obscurity as a result of this pervasive improvement in access to space, Starlink will become synonymous with satellites.

For far-sighted firms that come to dominate a new industry, there is a precedent for their product becoming synonymous with the idea itself. Hoover. Westinghouse. Kleenex. Google. Frisbee. Xerox. Kodak. Motorola.

This may become troublesome when the first company to market engages in anti-competitive tactics to protect its market share, something that has largely been legal since President Reagan. For example, SpaceX could maintain its Starlink monopoly by pressuring other constellation developers into launching on ancient Soviet rockets. Similar behaviour by the United Aircraft and Transport Corporation, together with price fixing on air mail routes, contributed to its break-up in 1934. Fortunately, SpaceX will not be able to hold a total monopoly on fully reusable rockets indefinitely.

More worryingly, SpaceX's deployment of tens of thousands of LEO satellites could be construed as a co-option of the commons. Orbital slots that were previously public and unowned will be permanently occupied by a private company for private profit. While it is true that SpaceX's innovations provided the mechanism for commercialising the previously unremarkable vacuum, much of SpaceX's intellectual capital was built on billions of dollars of publicly funded research.

On the one hand, we need legislation to protect the proceeds of private investment, research, and development. Without this protection, innovators will be unable to finance ambitious projects, or will move their businesses to places that provide it. In either case, the public suffers from the lost wealth creation. On the other hand, we need laws to protect the public, the nominal owners of common property including the sky, from rent-seeking private entities that would annex public wealth. Neither hand, on its own, is correct, or even possible. Starlink's development gives us all the chance to find a safe middle ground in this new market. We will know we've found it when we've maximised the rate of innovation and common wealth creation.

Final Thought

Launch has been around for a long time, but without Starlink, Starship (a launcher cheap enough to be interesting) would not be viable.

Human spaceflight has been around for a long time too, and if you're a fighter jet pilot who is also a brain surgeon, you can go. With Starship and Starlink, human space exploration has a credible, near-term path from orbital outposts to fully industrialised deep-space cities.